spkit.entropy_sample

- spkit.entropy_sample(x, m, r)

Sample Entropy \(SampEn(X)\) or \(H_{se}(X)\)

Unlike entropy estimators that assume IID samples, Sample entropy is suited to temporal (non-IID) sources, such as physiological signals and signals in general. Like Approximate Entropy (entropy_approx), Sample Entropy measures the complexity of a signal by extracting patterns of m symbols. m is also called the embedding dimension. r is the tolerance: two patterns are considered the same if their maximum absolute difference is less than r. Unlike Approximate Entropy, Sample Entropy excludes self-similarity, i.e., a pattern is never counted as a match of itself.
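To make the matching rule concrete, here is a minimal NumPy sketch of the textbook definition, SampEn = -ln(A/B), where B counts pairs of distinct length-m templates whose maximum absolute difference is below r, and A does the same for length m+1. The function name `sample_entropy_sketch` is hypothetical and this is not spkit's implementation; returning `inf` when no matches are found is an assumption about how to report the undefined case.

```python
import numpy as np


def sample_entropy_sketch(x, m, r):
    """Textbook Sample Entropy, SampEn = -ln(A/B).

    Hypothetical helper for illustration only; not spkit's implementation.
    """
    x = np.asarray(x, dtype=float)
    n = len(x)

    def count_matches(length):
        # Build the first n - m templates of the given length, so the m- and
        # (m+1)-length counts are taken over the same starting points.
        templates = np.array([x[i:i + length] for i in range(n - m)])
        count = 0
        for i in range(len(templates) - 1):
            # Compare template i only with later templates (j > i): this skips
            # the self-match i == j and counts each pair once.
            dist = np.max(np.abs(templates[i + 1:] - templates[i]), axis=1)
            # Chebyshev distance (max absolute difference) below tolerance r.
            count += int(np.sum(dist < r))
        return count

    b = count_matches(m)      # pairs that match over m points
    a = count_matches(m + 1)  # pairs that still match over m + 1 points
    if a == 0 or b == 0:
        return np.inf         # assumption: report the undefined case as infinity
    return -np.log(a / b)
```

For example, a strictly alternating sequence such as [1, 2, 1, 2, ...] is perfectly predictable: every length-m match extends to a length-(m+1) match, so A = B and the sketch returns 0.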

- Parameters:

- x : 1d-array

input signal

- m : int

embedding dimension; a usual value is m=3, but the best choice depends on the signal.

If the signal has a high sampling rate, i.e., it is very smooth, then any 3 consecutive samples will be quite similar.

- r : float

tolerance; a usual value is r = 0.2*std(x)

- Returns:

- SampEn : float

sample entropy value
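The remark about smooth, densely sampled signals can be checked numerically. The sketch below assumes a noise-free 1 Hz sine sampled at an illustrative 1000 Hz (neither value comes from spkit), uses the usual tolerance r = 0.2*std(x), and measures the maximum absolute difference between each window of m=3 samples and the window shifted by one sample.

```python
import numpy as np

fs = 1000                              # assumed sampling rate in Hz (illustrative)
t = np.arange(2 * fs) / fs             # 2 seconds of sample times
x = np.sin(2 * np.pi * 1 * t)          # noise-free 1 Hz sine: a very smooth signal

m = 3                                  # embedding dimension
r = 0.2 * np.std(x)                    # the usual tolerance choice

# All windows of m consecutive samples, one per starting index.
windows = np.array([x[i:i + m] for i in range(len(x) - m + 1)])

# Maximum absolute difference between each window and the next one.
neighbour_dist = np.max(np.abs(windows[1:] - windows[:-1]), axis=1)

print(f"r = {r:.4f}, largest neighbour distance = {neighbour_dist.max():.4f}")
print(f"fraction of neighbouring windows that match: {np.mean(neighbour_dist < r):.2f}")
```

Every window matches its neighbour here, so many of the counted matches reflect oversampling rather than structure in the signal, which is why a suitable m depends on the signal.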

See also

entropy_approx : Approximate Entropy

dispersion_entropy : Dispersion Entropy

entropy_spectral : Spectral Entropy

entropy_svd : SVD Entropy

entropy_permutation : Permutation Entropy

entropy_differential : Differential Entropy

entropy : Entropy

Notes

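Sample Entropy is generally considered better than Approximate Entropy, computationally and otherwise. Because it excludes self-matches, it avoids the bias that counting each pattern as a match of itself introduces into Approximate Entropy.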
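The self-match distinction can be written out with the commonly used definition. The notation below (templates \(\mathbf{x}_m(i)\), counts \(A\) and \(B\), signal length \(N\)) is introduced here for illustration and is an assumption, not taken from spkit; the strict \(< r\) follows the matching rule described above.

```latex
\mathbf{x}_m(i) = \big(x(i), x(i+1), \dots, x(i+m-1)\big), \qquad 1 \le i \le N-m

d\big(\mathbf{x}_m(i), \mathbf{x}_m(j)\big) = \max_{0 \le k < m} \lvert x(i+k) - x(j+k) \rvert

B = \#\{(i,j) : 1 \le i < j \le N-m,\; d(\mathbf{x}_m(i), \mathbf{x}_m(j)) < r\}

A = \#\{(i,j) : 1 \le i < j \le N-m,\; d(\mathbf{x}_{m+1}(i), \mathbf{x}_{m+1}(j)) < r\}

\mathrm{SampEn}(X) = -\ln\frac{A}{B}
```

Because only pairs with \(i < j\) are counted, a template is never compared with itself; Approximate Entropy's counts include that \(i = j\) match. If \(A = 0\) or \(B = 0\), the ratio gives no finite value.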


Examples

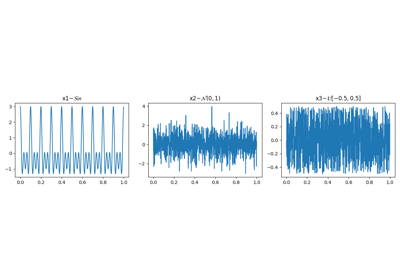

>>> #sp.entropy_sample
>>> import numpy as np
>>> import spkit as sp
>>> t = np.linspace(0,2,200)
>>> x1 = np.sin(2*np.pi*1*t) + 0.1*np.random.randn(len(t)) # less noisy
>>> x2 = np.sin(2*np.pi*1*t) + 0.5*np.random.randn(len(t)) # very noisy
>>> #Sample Entropy
>>> H_x1 = sp.entropy_sample(x1,m=3,r=0.2*np.std(x1))
>>> H_x2 = sp.entropy_sample(x2,m=3,r=0.2*np.std(x2))
>>> print('Sample entropy')
Sample entropy
>>> print('Entropy of x1: SampEn(x1)= ',H_x1)
Entropy of x1: SampEn(x1)=  0.6757312057041359
>>> print('Entropy of x2: SampEn(x2)= ',H_x2)
Entropy of x2: SampEn(x2)=  1.6700625342505353