spkit.entropy_spectral

- spkit.entropy_spectral(x, fs, method='fft', alpha=1, base=2, normalize=True, axis=-1, nperseg=None, esp=1e-10)

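Spectral Entropy \(H_f(x)\)

Measure of the uncertainty of the frequency components of a signal. The spectral entropy of a signal drawn from a uniform distribution will usually be quite similar to that of a signal drawn from a Gaussian distribution, since both (as white noise with independent samples) have an almost flat spectrum.

\[H_f(x) = H(F(x))\]

where \(F(x)\) is the spectrum of \(x\) computed with the FFT (see `method`), normalised to sum to one, and \(H(\cdot)\) is the entropy of that distribution.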
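A minimal NumPy sketch of the definition above, for illustration only. `spectral_entropy_sketch` is a hypothetical helper, not part of spkit, and it assumes the spectrum is the unscaled one-sided power spectrum \(|\mathrm{FFT}(x)|^2\) normalised to sum to one. spkit's periodogram and Welch estimates may scale or average bins differently, so its numbers are not expected to match `sp.entropy_spectral` exactly. The usage at the end also illustrates the remark about uniform versus Gaussian noise.

```python
import numpy as np


def spectral_entropy_sketch(x, base=2.0, normalize=False):
    """Entropy of the normalised power spectrum of x.

    Hypothetical helper for illustration, not part of spkit. Uses the unscaled
    one-sided power spectrum |rfft(x)|**2; assumes x is not identically zero.
    """
    x = np.asarray(x, dtype=float)
    psd = np.abs(np.fft.rfft(x)) ** 2          # power in each non-negative frequency bin
    n_bins = psd.size
    p = psd / psd.sum()                        # normalise: the bins now sum to 1
    p = p[p > 0]                               # empty bins contribute 0 (0 * log 0 := 0)
    H = -np.sum(p * np.log(p)) / np.log(base)  # entropy in units of log base `base`
    if normalize:
        H /= np.log(n_bins) / np.log(base)     # divide by the maximum, log(n_bins)
    return H


rng = np.random.default_rng(1)
n = np.arange(1000)
signals = {
    "gaussian noise": rng.standard_normal(n.size),
    "uniform noise": rng.uniform(-1.0, 1.0, n.size),
    "sinusoid (50 cycles)": np.cos(2 * np.pi * 50 * n / 1000),
}
for name, s in signals.items():
    print(f"{name:22s} normalised H_f = {spectral_entropy_sketch(s, normalize=True):.3f}")
```

Both noise signals should give similar, high normalised values (roughly 0.9), while the sinusoid, whose power sits in a single bin, gives a value near 0. Averaging segment spectra, as Welch's method does, reduces the scatter of a noise spectrum and pushes its value closer to 1.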

- Parameters:

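- x: 1d array

- fs: sampling frequency

- method: 'fft' uses the periodogram and 'welch' uses Welch's method

- base: base of the logarithm; 2 gives bits, 10 gives hartleys (nats correspond to the natural logarithm, base e)

- normalize: if True, the entropy is divided by its maximum possible value, the log of the number of frequency bins, so the result lies in [0, 1]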
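Changing `base` only changes the logarithm, so it rescales the result by a constant, and dividing by the maximum entropy removes that constant. A short derivation, writing \(p_k\) for the normalised spectrum over \(N\) frequency bins (notation introduced here for illustration) and assuming `normalize=True` divides by the maximum \(\log_b N\), which the example output below is consistent with:

\[H_f^{(b)}(x) = -\sum_{k=1}^{N} p_k \log_b p_k = \frac{H_f^{(2)}(x)}{\log_2 b}, \qquad \frac{H_f^{(b)}(x)}{\log_b N} = \frac{H_f^{(2)}(x)}{\log_2 N} \in [0, 1]\]

For example, 1 bit corresponds to \(\log_{10} 2 \approx 0.301\) hartleys, while the normalised value is the same for every base.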

- Returns:

- H_fx: scalar

Spectral entropy

See also

- entropy: Entropy

- entropy_sample: Sample Entropy

- entropy_approx: Approximate Entropy

- dispersion_entropy: Dispersion Entropy

- entropy_svd: SVD Entropy

- entropy_permutation: Permutation Entropy

- entropy_differential: Differential Entropy

Examples

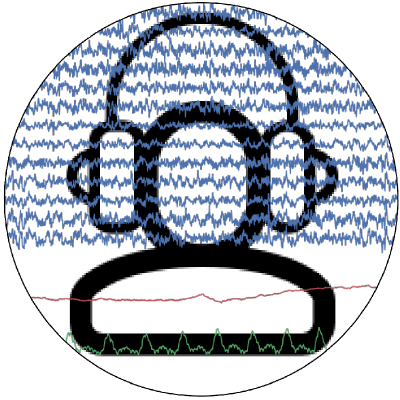

```python
import numpy as np
import matplotlib.pyplot as plt
import spkit as sp

np.random.seed(1)
fs = 1000
t = np.arange(1000) / fs
x1 = np.random.randn(len(t))  # Gaussian noise
# sum of 10, 20 and 30 Hz sinusoids
x2 = np.cos(2*np.pi*10*t) + np.cos(2*np.pi*30*t) + np.cos(2*np.pi*20*t)

# Shannon entropy of the sample values vs. spectral entropy (not normalised)
Hx1 = sp.entropy(x1)
Hx2 = sp.entropy(x2)
Hx1_se = sp.entropy_spectral(x1, fs=fs, method='welch', normalize=False)
Hx2_se = sp.entropy_spectral(x2, fs=fs, method='welch', normalize=False)

print('Spectral Entropy:')
print(' - H_f(x1) = ', Hx1_se)
print(' - H_f(x2) = ', Hx2_se)
print('Shannon Entropy:')
print(' - H(x1) = ', Hx1)
print(' - H(x2) = ', Hx2)
print('-')

# The same quantities, normalised
Hx1_n = sp.entropy(x1, normalize=True)
Hx2_n = sp.entropy(x2, normalize=True)
Hx1_se_n = sp.entropy_spectral(x1, fs=fs, method='welch', normalize=True)
Hx2_se_n = sp.entropy_spectral(x2, fs=fs, method='welch', normalize=True)

print('Spectral Entropy (Normalised)')
print(' - H_f(x1) = ', Hx1_se_n)
print(' - H_f(x2) = ', Hx2_se_n)
print('Shannon Entropy (Normalised)')
print(' - H(x1) = ', Hx1_n)
print(' - H(x2) = ', Hx2_n)
np.random.seed(None)

# Left: the two signals; right: their periodograms, labelled with both entropies
plt.figure(figsize=(11, 4))
plt.subplot(121)
plt.plot(t, x1, label='x1: Gaussian Noise', alpha=0.8)
plt.plot(t, x2, label='x2: Sinusoidal', alpha=0.8)
plt.xlim([t[0], t[-1]])
plt.xlabel('time (s)')
plt.ylabel('amplitude')
plt.legend(bbox_to_anchor=(1, 1.2), ncol=2, loc='upper right')
plt.subplot(122)
label1 = f'x1: Gaussian Noise \n H(x): {Hx1.round(2)}, H_f(x): {Hx1_se.round(2)}'
label2 = f'x2: Sinusoidal \n H(x): {Hx2.round(2)}, H_f(x): {Hx2_se.round(2)}'
P1x, f1q = sp.periodogram(x1, fs=fs, show_plot=True, label=label1)
P2x, f2q = sp.periodogram(x2, fs=fs, show_plot=True, label=label2)
plt.legend(bbox_to_anchor=(0.4, 0.4))
plt.grid()
plt.tight_layout()
plt.show()
```

Printed output:

```
Spectral Entropy:
 - H_f(x1) =  6.889476342103717
 - H_f(x2) =  2.7850662938305786
Shannon Entropy:
 - H(x1) =  3.950686888274901
 - H(x2) =  3.8204484006660255
-
Spectral Entropy (Normalised)
 - H_f(x1) =  0.9826348642134711
 - H_f(x2) =  0.3972294995395931
Shannon Entropy (Normalised)
 - H(x1) =  0.8308686349524237
 - H(x2) =  0.8445663920995877
```

The Shannon entropy of the sample values is similar for both signals, whereas the spectral entropy clearly separates the broadband noise from the three-tone signal.
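As a consistency check on the printed numbers (not a statement about spkit's internals), each normalised spectral entropy equals the unnormalised value divided by \(\log_2 129\), the maximum entropy in bits of a distribution over 129 frequency bins. The bin count 129 is inferred from the output: it is the number of one-sided bins of a 256-sample segment (256/2 + 1), which would fit a default Welch segment length of 256, an assumption not stated on this page.

```python
import math

# Values copied from the example output (normalize=False and normalize=True).
raw = {"x1": 6.889476342103717, "x2": 2.7850662938305786}
normalised = {"x1": 0.9826348642134711, "x2": 0.3972294995395931}

# Maximum entropy in bits over N equally weighted bins is log2(N).
# N = 129 is an inference from the numbers, not a documented value.
h_max = math.log2(129)
for name in raw:
    print(name, raw[name] / h_max, normalised[name])
```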