spkit.entropy

- spkit.entropy(x, alpha=1, base=2, normalize=False, is_discrete=False, bins='fd', return_n_bins=False, ignoreZero=False, esp=1e-10)

Entropy \(H(X)\)

Given a sequence or signal x (1d array), compute its entropy H(x).

If is_discrete is True, x is treated as a discrete sequence: the frequency of each unique value of x is counted, and these relative frequencies are used directly to estimate H(x).
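For the discrete case, the estimate reduces to counting unique values. The sketch below is a pure-Python illustration that assumes Shannon entropy (alpha=1) with a base-2 logarithm; discrete_entropy is a hypothetical helper written for this example, not spkit's implementation.

```python
import math
from collections import Counter


def discrete_entropy(x, base=2):
    """Shannon entropy of a discrete sequence, estimated from the relative
    frequencies of its unique values (hypothetical helper, not spkit code)."""
    n = len(x)
    counts = Counter(x)  # frequency of every unique value in x
    # H(x) = sum P(x) * log(1 / P(x)), with P(x) = count / n
    return sum((c / n) * math.log(n / c, base) for c in counts.values())


print(discrete_entropy([0, 1, 0, 1]))          # two equally likely symbols: 1 bit
print(discrete_entropy(["a", "a", "a", "a"]))  # a single symbol: 0 bits
```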

For a real-valued sequence (is_discrete=False), however, the density of x is first estimated with a histogram whose bin width is chosen by the Freedman-Diaconis estimator (the default, bins='fd'), and the resulting bin probabilities are used to compute H(x).
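The histogram route for real-valued sequences can be sketched in pure Python. The Freedman-Diaconis rule sets the bin width to 2·IQR·n^(-1/3); this sketch assumes equal-width bins spanning the data range and quartiles from statistics.quantiles(method="inclusive"). The names fd_bin_width and histogram_entropy are hypothetical, and spkit's own binning (see spkit.bin_width) may differ in detail.

```python
import math
import random
import statistics


def fd_bin_width(x):
    """Freedman-Diaconis bin width: 2 * IQR * n**(-1/3)."""
    q1, _, q3 = statistics.quantiles(x, n=4, method="inclusive")
    return 2.0 * (q3 - q1) * len(x) ** (-1.0 / 3.0)


def histogram_entropy(x, base=2):
    """Shannon entropy of a real-valued sequence, estimated from a histogram
    with Freedman-Diaconis bin width (hypothetical helper). Returns (H, n_bins)."""
    lo, hi = min(x), max(x)
    width = fd_bin_width(x)
    # equal-width bins spanning [lo, hi]; fall back to one bin if the IQR is zero
    n_bins = max(1, math.ceil((hi - lo) / width)) if width > 0 else 1
    counts = [0] * n_bins
    for v in x:
        # bin index of v; the maximum value is placed in the last bin
        i = min(int((v - lo) / (hi - lo) * n_bins), n_bins - 1) if hi > lo else 0
        counts[i] += 1
    n = len(x)
    # bin probabilities P = count / n; empty bins contribute nothing
    h = sum((c / n) * math.log(n / c, base) for c in counts if c > 0)
    return h, n_bins


random.seed(1)
x = [random.random() for _ in range(10000)]  # uniform samples on [0, 1)
H, N = histogram_entropy(x)
print(N, H, H / math.log(N, 2))  # H should sit just below its maximum log2(N)
```

For near-uniform data the estimate lands close to log2(N), which is why a normalised value near 1 is expected for such signals.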

- Rényi entropy of order α (generalised form), with alpha in [0, inf]:

- alpha = 0: Max-entropy

\(H(x) = \log N\), where N is the number of bins

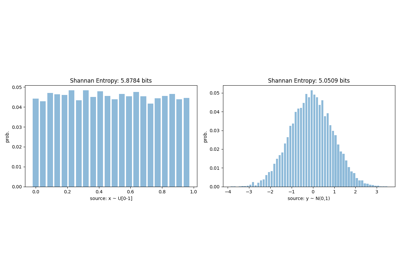

- alpha = 1: Shannon entropy

\(H(x) = -\sum_x P(x)\log P(x)\)

- alpha = 2, or any other value: Rényi entropy (alpha = 2 is the collision entropy)

\(H(x) = \frac{1}{1-\alpha}\log \sum_x P(x)^{\alpha}\)

- alpha = inf: Min-entropy

\(H(x) = -\log \max_x P(x)\)
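The four cases above can be collected into one function acting on bin probabilities P(x). This is a minimal pure-Python sketch that assumes the probabilities are already available (for example from the histogram described earlier); renyi_entropy is a hypothetical helper, not spkit's API.

```python
import math


def renyi_entropy(p, alpha=1, base=2):
    """Rényi entropy of order alpha for a probability vector p (one entry per bin).

    alpha=0 -> log N (max-entropy), alpha=1 -> Shannon, alpha=inf -> min-entropy,
    otherwise 1/(1-alpha) * log(sum p**alpha).
    """
    nz = [pi for pi in p if pi > 0]  # zero bins add nothing to the sums below
    if alpha == 0:
        return math.log(len(p), base)  # N counts every bin, as in the definition
    if alpha == 1:
        return sum(pi * math.log(1 / pi, base) for pi in nz)
    if math.isinf(alpha):
        return -math.log(max(nz), base)
    return math.log(sum(pi ** alpha for pi in nz), base) / (1 - alpha)


p = [0.5, 0.25, 0.125, 0.125]
for a in (0, 1, 2, math.inf):
    print(a, renyi_entropy(p, alpha=a))
```

Rényi entropy is non-increasing in alpha, so the max-entropy (alpha=0) and min-entropy (alpha=inf) bound every other order. Passing base=math.e or base=10 rescales the result to nats or bans.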

- Parameters:

- x: 1d array

input sequence or signal

- is_discrete: bool, default=False.

If True, the frequencies of the unique values are used to estimate H(x).

- alpha: float in [0, inf], default=1

alpha = 1 (default): Shannon entropy, \(H(x) = -\sum_x P(x)\log P(x)\)

alpha = 0: maximum entropy, \(H(x) = \log N\), where N is the number of bins

alpha = 'inf' or np.inf: minimum entropy, \(H(x) = -\log \max_x P(x)\)

Any other value of alpha: Rényi entropy (the collision entropy for alpha = 2), \(H(x) = \frac{1}{1-\alpha}\log \sum_x P(x)^{\alpha}\)

- base: base of the logarithm, default=2

Determines the unit of entropy: base=2 (default) gives bits, base=e gives nats, and base=10 gives bans.

New in version 0.0.9.5.

- normalize: bool, default=False

If True, the normalised entropy \(H(x)/\max H(x) = H(x)/\log N\) is returned, which lies in the range 0 to 1.

This is useful when comparing two different sources, since it puts both entropies on the same 0 to 1 scale.

- bins: {str, int}, default='fd'

If a str, it selects the method used to compute the bin width; the default, bins='fd' (Freedman-Diaconis), is treated as the optimal bin width for a real-valued signal or sequence. See help(spkit.bin_width) for other methods.

If an int, a fixed number of bins is used. This is useful when comparing two different sources, since both are then estimated with the same number of bins.

- return_n_bins: bool, default=False

If True, the number of bins is also returned.

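- ignoreZero: bool, default=False

If True, zero-valued probabilities are omitted before the computation.

In practice this makes little difference: mathematically, zero probabilities contribute nothing to \(-\sum_x P(x)\log P(x)\) (taking \(0 \log 0 = 0\)) or to \(\sum_x P(x)^{\alpha}\) for α > 0.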
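The normalize and base options interact simply: dividing by the maximum log(N) cancels the log base, so the normalised value is unit-free. A minimal sketch, assuming N is the number of bins (the length of the probability vector); normalized_entropy is a hypothetical helper, not spkit's API.

```python
import math


def normalized_entropy(p, base=2):
    """Shannon entropy of bin probabilities p divided by its maximum log(N),
    where N = len(p) is the number of bins (hypothetical helper)."""
    n_bins = len(p)
    if n_bins < 2:
        raise ValueError("need at least two bins, since log(1) = 0")
    # zero-probability bins are skipped, using the convention 0 * log(0) = 0
    h = sum(pi * math.log(1 / pi, base) for pi in p if pi > 0)
    return h / math.log(n_bins, base)


uniform = [0.25, 0.25, 0.25, 0.25]
skewed = [0.7, 0.1, 0.1, 0.1]
print(normalized_entropy(uniform))  # the maximum value, 1
print(normalized_entropy(skewed))   # below 1: mass concentrated in one bin
# the log base cancels in the ratio, so bits and nats give the same value
print(normalized_entropy(skewed, base=2), normalized_entropy(skewed, base=math.e))
```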

- Returns:

- H: entropy value

- N: number of bins, returned only if return_n_bins=True

See also

entropy_sample: Sample Entropy

entropy_approx: Approximate Entropy

dispersion_entropy: Dispersion Entropy

entropy_spectral: Spectral Entropy

entropy_svd: SVD Entropy

entropy_permutation: Permutation Entropy

entropy_differential: Differential Entropy

Notes

Examples

>>> import numpy as np
>>> import spkit as sp
>>> np.random.seed(1)
>>> x = np.random.rand(10000)
>>> y = np.random.randn(10000)
>>> # Shannon entropy
>>> H_x = sp.entropy(x, alpha=1)
>>> H_y = sp.entropy(y, alpha=1)
>>> print('Shannon entropy')
>>> print('Entropy of x: H(x) = ', H_x)
>>> print('Entropy of y: H(y) = ', H_y)
>>> print('')
>>> Hn_x = sp.entropy(x, alpha=1, normalize=True)
>>> Hn_y = sp.entropy(y, alpha=1, normalize=True)
>>> print('Normalised Shannon entropy')
>>> print('Entropy of x: H(x) = ', Hn_x)
>>> print('Entropy of y: H(y) = ', Hn_y)
>>> np.random.seed(None)
Shannon entropy
Entropy of x: H(x) = 4.458019387223165
Entropy of y: H(y) = 5.043357283463282
Normalised Shannon entropy
Entropy of x: H(x) = 0.9996833158270148
Entropy of y: H(y) = 0.8503760993630085