
Decision Trees with shrinking capability - Regression example

Decision Trees with shrinking capability from SpKit (Regression example)

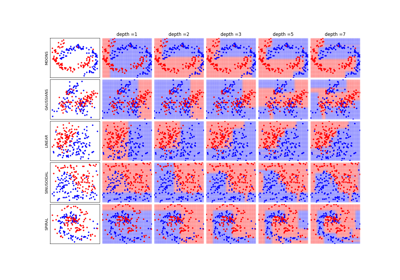

In this notebook, we show how the decision tree from *spkit* can be used to analyse training and testing performance at each depth of a trained tree. A trained tree can then be shrunk to any smaller depth, *without retraining it*. So, with the Decision Tree from *spkit*, you can choose a very high value for max_depth (or just -1, for infinity) and analyse the performance (accuracy, MSE, loss) on the training and testing (in practice, validation) sets at each depth level. Once you decide which depth is right, you can shrink the trained tree to that depth, without explicitly training it again with a new depth parameter.
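To illustrate why no retraining is needed, here is a minimal sketch in plain Python. It assumes a toy tree in which every node, not only the leaves, stores the mean target of the training samples that reached it. The dictionary layout and the names `leaf`, `split`, `toy_tree` and `predict_at_depth` are hypothetical and are not spkit's internals; depth here simply counts the number of splits followed.

```python
# Hypothetical toy tree (not spkit's internal structure): every node keeps
# "value", the mean training target at that node, so any node can act as a leaf.
def leaf(value):
    return {"value": value}

def split(feature, threshold, value, left, right):
    return {"feature": feature, "threshold": threshold, "value": value,
            "left": left, "right": right}

toy_tree = split(0, 0.5, 10.0,
                 split(1, 0.2, 4.0, leaf(2.0), leaf(6.0)),
                 leaf(16.0))

def predict_at_depth(node, x, max_depth):
    """Follow at most max_depth splits, then return the value stored there."""
    depth = 0
    while "feature" in node and depth < max_depth:
        go_left = x[node["feature"]] <= node["threshold"]
        node = node["left"] if go_left else node["right"]
        depth += 1
    return node["value"]

x = [0.3, 0.1]
for d in range(3):
    # The same trained tree yields a prediction for every depth limit.
    print(d, predict_at_depth(toy_tree, x, d))
```

Because the per-node values are fixed at training time, evaluating the tree at a smaller depth only changes where the walk stops.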

spkit version : 0.0.9.7

(442, 10) (442,)

(309, 10) (133, 10) (309,) (133,)

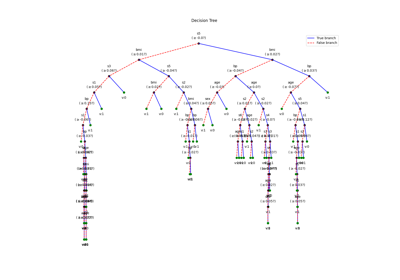

Depth of trained Tree 15

MSE

- Training : 1.0134843581445523

- Testing : 5031.063909774436

MAE

- Training : 0.13052858683926644

- Testing : 53.34586466165413

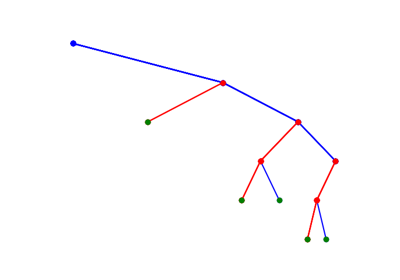

{'measure': 'mae', 1: {'train': 53.9808084354697, 'test': 52.17085185630181}, 2: {'train': 46.86851907385888, 'test': 44.98487507378485}, 3: {'train': 43.141568996938794, 'test': 45.35190431482284}, 4: {'train': 39.356810992674724, 'test': 45.8823993621665}, 5: {'train': 35.00590288867435, 'test': 46.97168350523505}, 6: {'train': 28.592558822370357, 'test': 48.15153853969643}, 7: {'train': 22.47797628054624, 'test': 49.607500193059686}, 8: {'train': 16.2127465465558, 'test': 49.68983123613818}, 9: {'train': 10.612649612164175, 'test': 50.82985738154911}, 10: {'train': 5.7302203729388195, 'test': 50.60221983530255}, 11: {'train': 2.6400749196865703, 'test': 51.43969676939602}, 12: {'train': 1.3022653721682846, 'test': 52.42468671679198}, 13: {'train': 0.7022191400832177, 'test': 53.07196562835661}, 14: {'train': 0.3797195253505933, 'test': 53.54636591478696}, 15: {'train': 0.13052858683926644, 'test': 53.34586466165413}}
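One way to read this dictionary is to pick the depth with the lowest test error. The sketch below copies a subset of the values printed above, rounded to two decimals; the variable names `lcurve`, `depths` and `best_depth` are illustrative. The omitted depths 6 to 14 all have a test MAE above 48, so they do not change the result.

```python
# Subset of the learning-curve output above, rounded to two decimals.
lcurve = {
    "measure": "mae",
    1: {"train": 53.98, "test": 52.17},
    2: {"train": 46.87, "test": 44.98},
    3: {"train": 43.14, "test": 45.35},
    4: {"train": 39.36, "test": 45.88},
    5: {"train": 35.01, "test": 46.97},
    15: {"train": 0.13, "test": 53.35},
}

# Integer keys are depths; the "measure" key only names the metric.
depths = [k for k in lcurve if isinstance(k, int)]

# Choose the depth whose test (validation) error is smallest.
best_depth = min(depths, key=lambda d: lcurve[d]["test"])
print(best_depth, lcurve[best_depth])
# prints: 2 {'train': 46.87, 'test': 44.98}
```

This matches the depth of 2 that the example shrinks the tree to.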

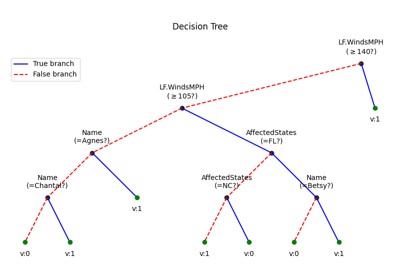

Depth of trained Tree 2

MSE

- Training : 3380.8011201388626

- Testing : 3333.5221115404897

MAE

- Training : 46.86851907385888

- Testing : 44.98487507378485

import numpy as np

import matplotlib.pyplot as plt

from sklearn.model_selection import train_test_split

import spkit

print('spkit version :', spkit.__version__)

# Fix the NumPy seed so that the train/test split below is reproducible

np.random.seed(100)

# Regression - Diabetes Dataset - score

from spkit.ml import RegressionTree

from sklearn.datasets import load_diabetes

data = load_diabetes()

X = data.data

y = data.target

feature_names = data.feature_names

print(X.shape, y.shape)

Xt,Xs,yt,ys = train_test_split(X,y,test_size =0.3)

print(Xt.shape, Xs.shape,yt.shape, ys.shape)

# Train with max_depth=15, then report MSE and MAE

model = RegressionTree(max_depth=15)

model.fit(Xt,yt,feature_names=feature_names)

ytp = model.predict(Xt)

ysp = model.predict(Xs)

print('Depth of trained Tree ', model.getTreeDepth())

print('MSE')

print('- Training : ',np.mean((ytp-yt)**2))

print('- Testing : ',np.mean((ysp-ys)**2))

print('MAE')

print('- Training : ',np.mean(np.abs(ytp-yt)))

print('- Testing : ',np.mean(np.abs(ysp-ys)))
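For reference, the two quantities printed above correspond to the usual definitions of mean squared error and mean absolute error. The symbols are notation introduced here, not taken from the example: $\hat{y}_i$ is the prediction for sample $i$, $y_i$ its target, and $N$ the number of samples in the set being evaluated.

```latex
\mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N} (\hat{y}_i - y_i)^2,
\qquad
\mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N} \lvert \hat{y}_i - y_i \rvert
```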

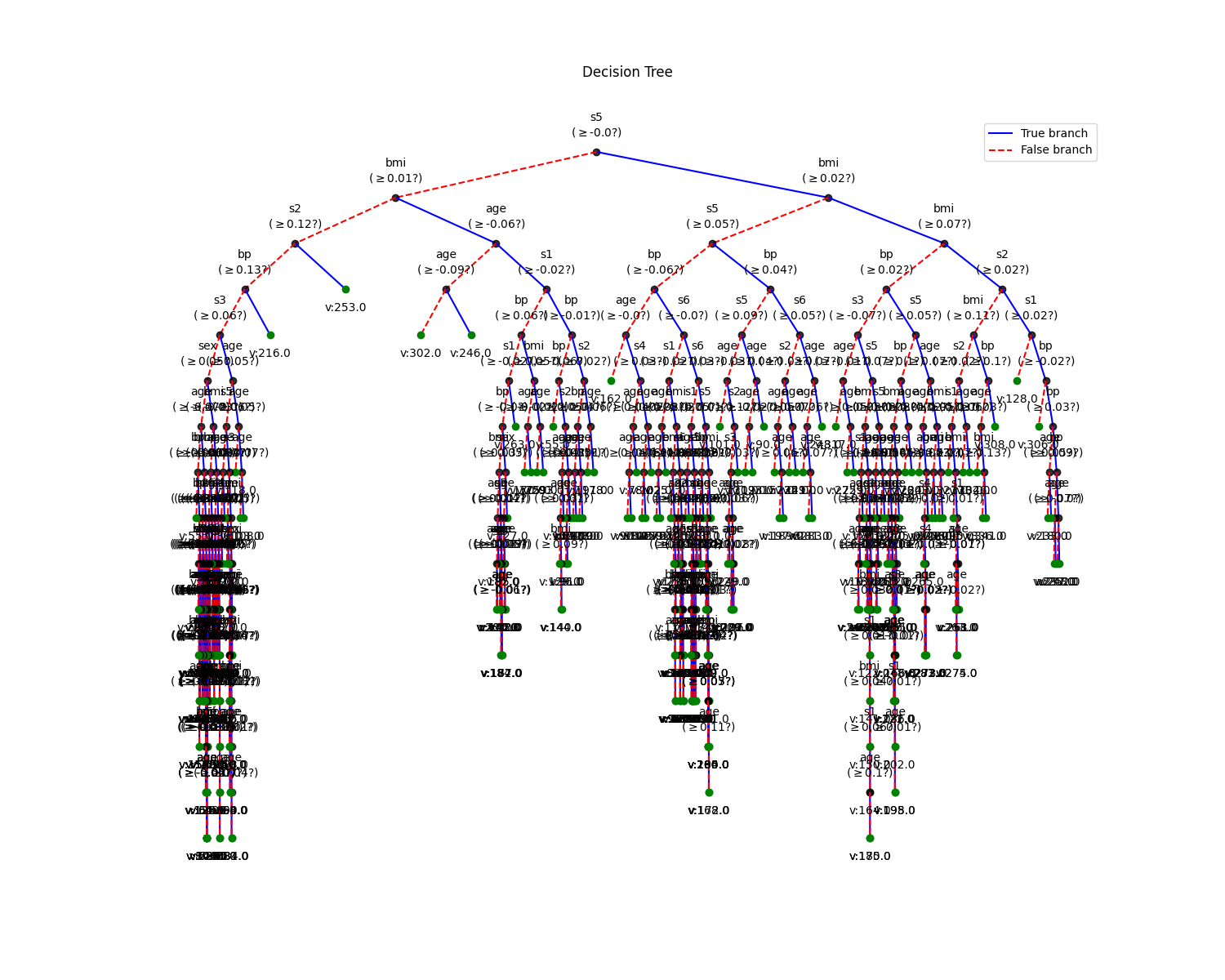

# Plot trained Tree

plt.figure(figsize=(15,12))

model.plotTree()

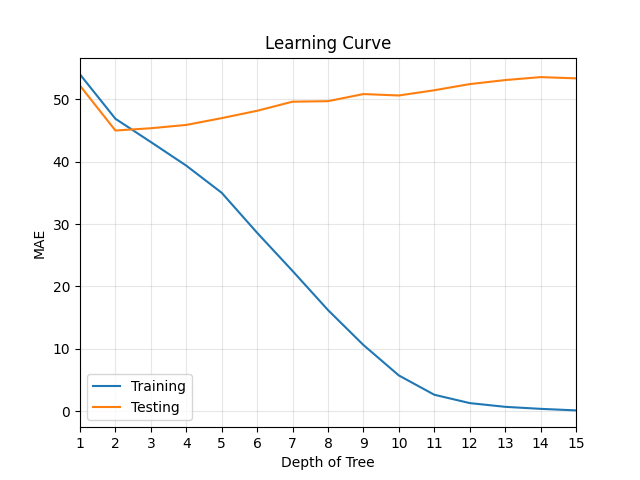

# Analysing the Learning Curve and Tree with MAE

Lcurve = model.getLcurve(Xt=Xt,yt=yt,Xs=Xs,ys=ys,measure='mae')

print(Lcurve)

model.plotLcurve()

plt.xlim([1,model.getTreeDepth()])

plt.xticks(np.arange(1,model.getTreeDepth()+1))

plt.show()

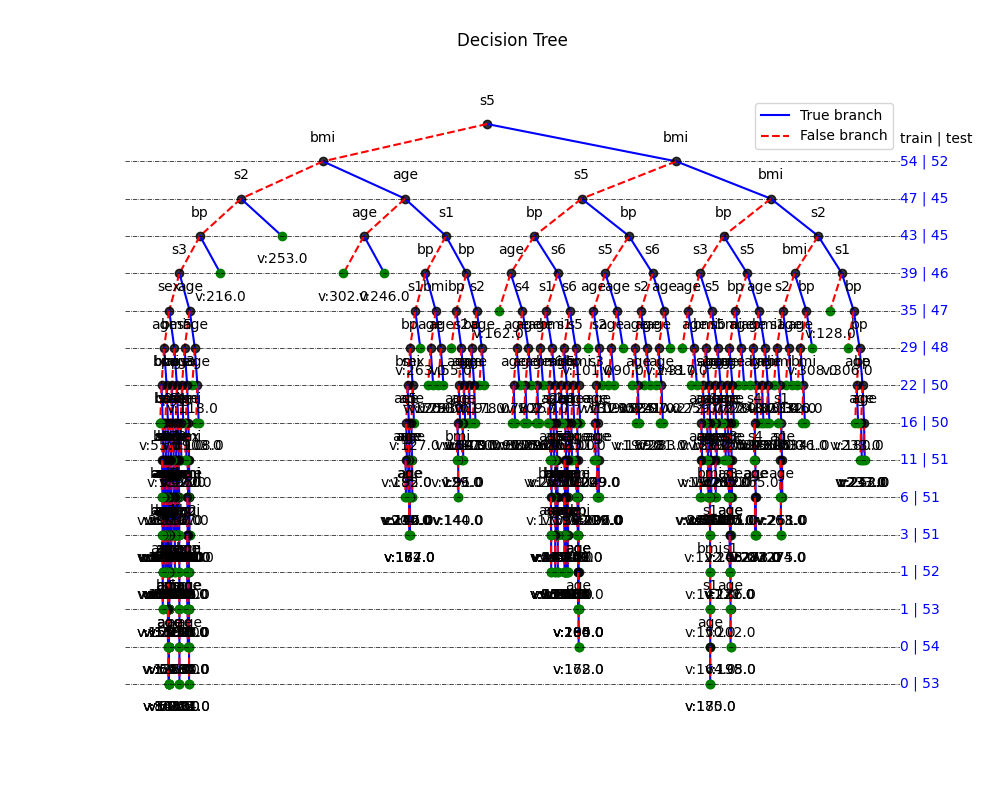

plt.figure(figsize=(10,8))

model.plotTree(show=False,Measures=True,showNodevalues=True,showThreshold=False)

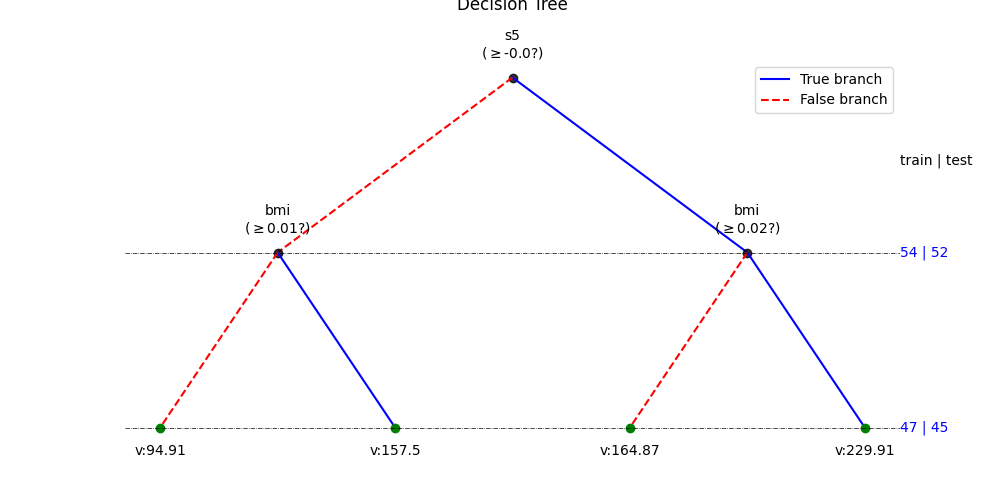

# Shrinking a trained Tree to depth=2

model.updateTree(shrink=True,max_depth=2)

ytp = model.predict(Xt)

ysp = model.predict(Xs)

print('Depth of trained Tree ', model.getTreeDepth())

print('MSE')

print('- Training : ',np.mean((ytp-yt)**2))

print('- Testing : ',np.mean((ysp-ys)**2))

print('MAE')

print('- Training : ',np.mean(np.abs(ytp-yt)))

print('- Testing : ',np.mean(np.abs(ysp-ys)))

plt.figure(figsize=(10,5))

model.plotTree(show=False,Measures=True,showNodevalues=True,showThreshold=True)
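As a rough picture of what shrinking might involve, the sketch below prunes a toy tree in place. It assumes, hypothetically, that every node already stores its own prediction under `"value"`; the dictionary layout and the functions `tree_depth` and `shrink` are illustrative and do not describe how `updateTree` is implemented in spkit.

```python
# Hypothetical toy tree: each node stores "value" (mean training target there).
toy_tree = {
    "feature": 0, "threshold": 0.5, "value": 10.0,
    "left": {"feature": 1, "threshold": 0.2, "value": 4.0,
             "left": {"value": 2.0},
             "right": {"feature": 0, "threshold": 0.1, "value": 6.0,
                       "left": {"value": 5.0}, "right": {"value": 7.0}}},
    "right": {"value": 16.0},
}

def tree_depth(node):
    """Number of splits on the longest root-to-leaf path."""
    if "left" not in node:
        return 0
    return 1 + max(tree_depth(node["left"]), tree_depth(node["right"]))

def shrink(node, max_depth):
    """Prune in place: nodes at max_depth drop their split and become leaves."""
    if "left" not in node:
        return
    if max_depth == 0:
        for key in ("feature", "threshold", "left", "right"):
            del node[key]
        return
    shrink(node["left"], max_depth - 1)
    shrink(node["right"], max_depth - 1)

print("before:", tree_depth(toy_tree))
shrink(toy_tree, 1)
print("after:", tree_depth(toy_tree))
```

In this sketch the pruned branches are discarded, so the operation cannot be undone without refitting; no data is revisited and only the stored node values are used.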

Total running time of the script: (0 minutes 2.326 seconds)
