spkit.mutual_info

- spkit.mutual_info(x, y, base=2, is_discrete=False, bins='fd', return_n_bins=False, verbose=False, ignoreZero=False)

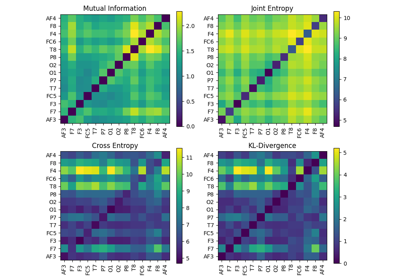

Mutual Information \(I(X;Y)\)

\[I(X;Y) = H(X)+H(Y)-H(X,Y)\]

\[I(X;Y) = H(X) - H(X|Y)\]

\[0 \le I(X;Y) \le \min\{ H(X), H(Y)\}\]

- Parameters:

- x, y: 1d-arrays

- is_discrete: bool, default=False.

If True, the frequencies of unique values are used to estimate I(x,y).

- base: base of the logarithm, default=2

Decides the unit of entropy.

If base=2 (default), the unit of entropy is bits; base=e gives nats; base=10 gives bans.

- bins: {str, int, [int, int]}, default='fd'

If bins is a str, it selects the method used to compute the bin width; bins='fd' (default) is considered the optimal bin width for a real-valued signal/sequence. Check bin_width for more methods.

If bins is an integer, the same fixed number of bins is used for both x and y.

If bins is a list of two integers ([Nx, Ny]), then Nx and Ny are the numbers of bins for x and y, respectively.

- return_n_bins: bool, default=False

If True, the numbers of bins are also returned.

- ignoreZero: bool, default=False

If True, probabilities with zero value are omitted before the computation.

This usually makes little difference to the result.

- Returns:

- I: Mutual Information I(x,y)

- (Nx, Ny): tuple of 2

Number of bins for x and y, respectively (only if return_n_bins=True).
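As a rough illustration of what the is_discrete and base parameters describe, the sketch below estimates mutual information from the frequencies of unique values and reports it in bits and in nats. It uses only the Python standard library; the helper names `entropy_discrete` and `mutual_info_discrete` are hypothetical and are not part of spkit, whose internal implementation may differ.

```python
from collections import Counter
from math import e, log

def entropy_discrete(values, base=2):
    """Plug-in entropy from the relative frequencies of unique values."""
    n = len(values)
    return -sum((c / n) * log(c / n, base) for c in Counter(values).values())

def mutual_info_discrete(x, y, base=2):
    """Illustrative estimate of I(X;Y) = H(X) + H(Y) - H(X,Y)."""
    hx = entropy_discrete(x, base)
    hy = entropy_discrete(y, base)
    # Joint entropy: treat each (x_i, y_i) pair as one symbol.
    hxy = entropy_discrete(list(zip(x, y)), base)
    return hx + hy - hxy

x = [0, 0, 1, 1, 0, 0, 1, 1]
y_same = list(x)                      # y fully determined by x
y_indep = [0, 1, 0, 1, 0, 1, 0, 1]    # every (x, y) pair equally frequent

I_bits = mutual_info_discrete(x, y_same, base=2)   # unit: bits
I_nats = mutual_info_discrete(x, y_same, base=e)   # unit: nats
I_zero = mutual_info_discrete(x, y_indep, base=2)

print(f"identical:   {I_bits:.3f} bits = {I_nats:.3f} nats")
print(f"independent: {abs(I_zero):.3f} bits")
```

For the identical sequences the estimate equals H(X), the upper bound in the inequality above; for the balanced, independent pair it is zero up to floating-point error. Changing the base only rescales the value.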

See also

entropy_joint: Joint Entropy

entropy_cond: Conditional Entropy

entropy_kld: KL-divergence Entropy

entropy_cross: Cross Entropy

Examples

>>> #sp.mutual_info
>>> import numpy as np
>>> import matplotlib.pyplot as plt
>>> import spkit as sp
>>> np.random.seed(1)
>>> x = np.random.randn(1000)
>>> y1 = 0.1*x + 0.9*np.random.randn(1000)
>>> y2 = 0.9*x + 0.1*np.random.randn(1000)
>>> I_xy1 = sp.mutual_info(x,y1)
>>> I_xy2 = sp.mutual_info(x,y2)
>>> print(r'I(x,y1) = ',I_xy1, '\t| y1 /= e x')
>>> print(r'I(x,y2) = ',I_xy2, '\t| y2 ~ x')
>>> np.random.seed(None)
I(x,y1) = 0.29196123466326007 | y1 /= e x
I(x,y2) = 2.6874431530714116 | y2 ~ x
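To make the identity \(I(X;Y) = H(X)+H(Y)-H(X,Y)\) concrete for real-valued signals, here is a minimal NumPy-only sketch of a histogram (plug-in) estimate. It is an assumption-laden illustration, not spkit's implementation: the helpers `entropy_from_counts` and `mutual_info_hist` are hypothetical names, bin edges come from NumPy's own Freedman-Diaconis rule (`bins='fd'`), and the values it prints need not match those of `sp.mutual_info`.

```python
import numpy as np

def entropy_from_counts(counts, base=2):
    """Plug-in Shannon entropy of a histogram given as an array of counts."""
    p = counts[counts > 0] / counts.sum()   # drop empty bins (0*log 0 = 0)
    return float(-np.sum(p * np.log(p)) / np.log(base))

def mutual_info_hist(x, y, bins="fd", base=2):
    """Histogram estimate of I(X;Y) = H(X) + H(Y) - H(X,Y).

    Returns (I, H(X), H(Y)) so the bounds on I can be checked.
    """
    # One set of bin edges per variable; 'fd' is the Freedman-Diaconis rule.
    ex = np.histogram_bin_edges(x, bins=bins)
    ey = np.histogram_bin_edges(y, bins=bins)
    joint, _, _ = np.histogram2d(x, y, bins=[ex, ey])
    hx = entropy_from_counts(joint.sum(axis=1), base)   # marginal of x
    hy = entropy_from_counts(joint.sum(axis=0), base)   # marginal of y
    hxy = entropy_from_counts(joint.ravel(), base)      # joint entropy
    return hx + hy - hxy, hx, hy

rng = np.random.default_rng(1)
x = rng.standard_normal(1000)
y1 = 0.1 * x + 0.9 * rng.standard_normal(1000)   # weakly related to x
y2 = 0.9 * x + 0.1 * rng.standard_normal(1000)   # strongly related to x

I1, hx1, hy1 = mutual_info_hist(x, y1)
I2, hx2, hy2 = mutual_info_hist(x, y2)
print(f"I(x,y1) = {I1:.3f} bits, I(x,y2) = {I2:.3f} bits")
```

Because the marginals are taken from the same joint histogram, the estimate stays within \(0 \le I(X;Y) \le \min\{H(X), H(Y)\}\) up to floating-point error, and the strongly related pair yields the larger value.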